Carnegie Mellon University scientists use Wi-Fi signals to sense people and model their body poses in 3D, even through walls.

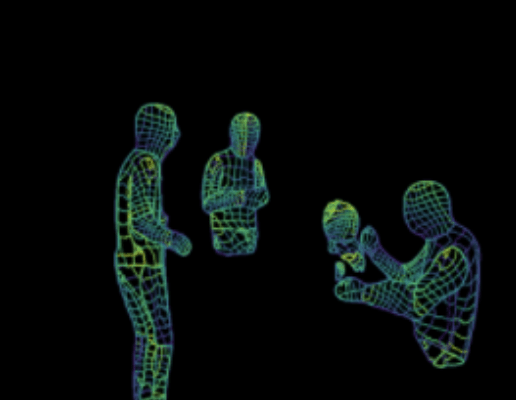

Scientists at Carnegie Mellon University have developed a low-cost method for sensing people through walls, using two Wi-Fi routers to reconstruct a person’s body shape and pose in 3D. Their deep neural network builds on DensePose, a system initially developed by researchers at Imperial College London, Facebook AI, and University College London, and maps Wi-Fi signals to UV coordinates, a 2D parameterization of the body’s 3D surface.
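
To make the idea of mapping Wi-Fi signals to UV coordinates more concrete, the sketch below shows only the two ends of such a pipeline: turning complex channel state information (CSI) into amplitude and phase features, and reading a DensePose-style output in which each pixel carries a body-part index (0 for background, 1 to 24 for body parts) and a (u, v) position on that part. The array shapes, the function names `csi_to_features` and `body_pixels`, and the simple phase unwrapping are assumptions made for illustration; this is not the researchers' code, and the neural network in between is omitted.

```python
import numpy as np

NUM_PARTS = 24  # DensePose splits the body surface into 24 parts; index 0 is background


def csi_to_features(csi: np.ndarray) -> np.ndarray:
    """Split complex CSI into amplitude and unwrapped phase channels.

    csi: complex array of shape (subcarriers, tx, rx). This shape is an
    assumption for the sketch, not a detail taken from the study.
    Returns a real array of shape (2, subcarriers, tx, rx).
    """
    amplitude = np.abs(csi)
    # Unwrap along the subcarrier axis to remove artificial 2*pi jumps.
    # A simplified stand-in for real phase cleaning.
    phase = np.unwrap(np.angle(csi), axis=0)
    return np.stack([amplitude, phase], axis=0)


def body_pixels(part_index: np.ndarray, u: np.ndarray, v: np.ndarray):
    """List (row, col, part, u, v) for every pixel labeled as a body part."""
    rows, cols = np.nonzero(part_index > 0)
    return [
        (int(r), int(c), int(part_index[r, c]), float(u[r, c]), float(v[r, c]))
        for r, c in zip(rows, cols)
    ]


if __name__ == "__main__":
    # Hypothetical input: 30 subcarriers, 3 transmit and 3 receive antennas.
    rng = np.random.default_rng(0)
    csi = rng.normal(size=(30, 3, 3)) + 1j * rng.normal(size=(30, 3, 3))
    print(csi_to_features(csi).shape)

    # Hypothetical 2x2 output map with a single body pixel on part 3.
    parts = np.zeros((2, 2), dtype=int)
    parts[0, 1] = 3
    print(body_pixels(parts, np.full((2, 2), 0.5), np.full((2, 2), 0.25)))
```

The point of the (part, u, v) representation is that every labeled pixel refers to a location on a 3D body surface, which is what allows a 2D prediction to describe a 3D pose.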

The Carnegie Mellon team’s key advance is mapping the poses of multiple subjects accurately with standard Wi-Fi antennas instead of costly equipment such as RGB cameras, LiDAR, and radar. Their research demonstrates that Wi-Fi signals can be used to estimate human poses, not merely to locate objects in a room.

The study suggests that this Wi-Fi-based approach could benefit home healthcare by offering a privacy-preserving way to monitor patients without cameras or other intrusive sensors. According to the researchers, the system is not affected by poor lighting or by physical barriers such as walls, and it is relatively inexpensive because it runs on typical household Wi-Fi routers.

The researchers believe that Wi-Fi signals could replace RGB images for human sensing in specific scenarios, offering a cost-effective and privacy-protective option for indoor monitoring. Because Wi-Fi is already widespread in homes in developed countries, the technology could be used to monitor the well-being of elderly people or to detect suspicious behavior at home.

Research on human pose estimation from images and videos is extensive, but the study notes that the literature on pose estimation from Wi-Fi or radar signals is limited, which points to a largely unexplored area of human sensing technology.